AI seems to have a branding problem. Even "artificial intelligence" doesn't really have a useful definition, so when it comes to the nitty-gritty of specific companies, models, and apps, all bets are off.

Example: lots of people talk about OpenAI, ChatGPT, and GPT as if they're the same thing (they're not).

OpenAI is an AI research company.

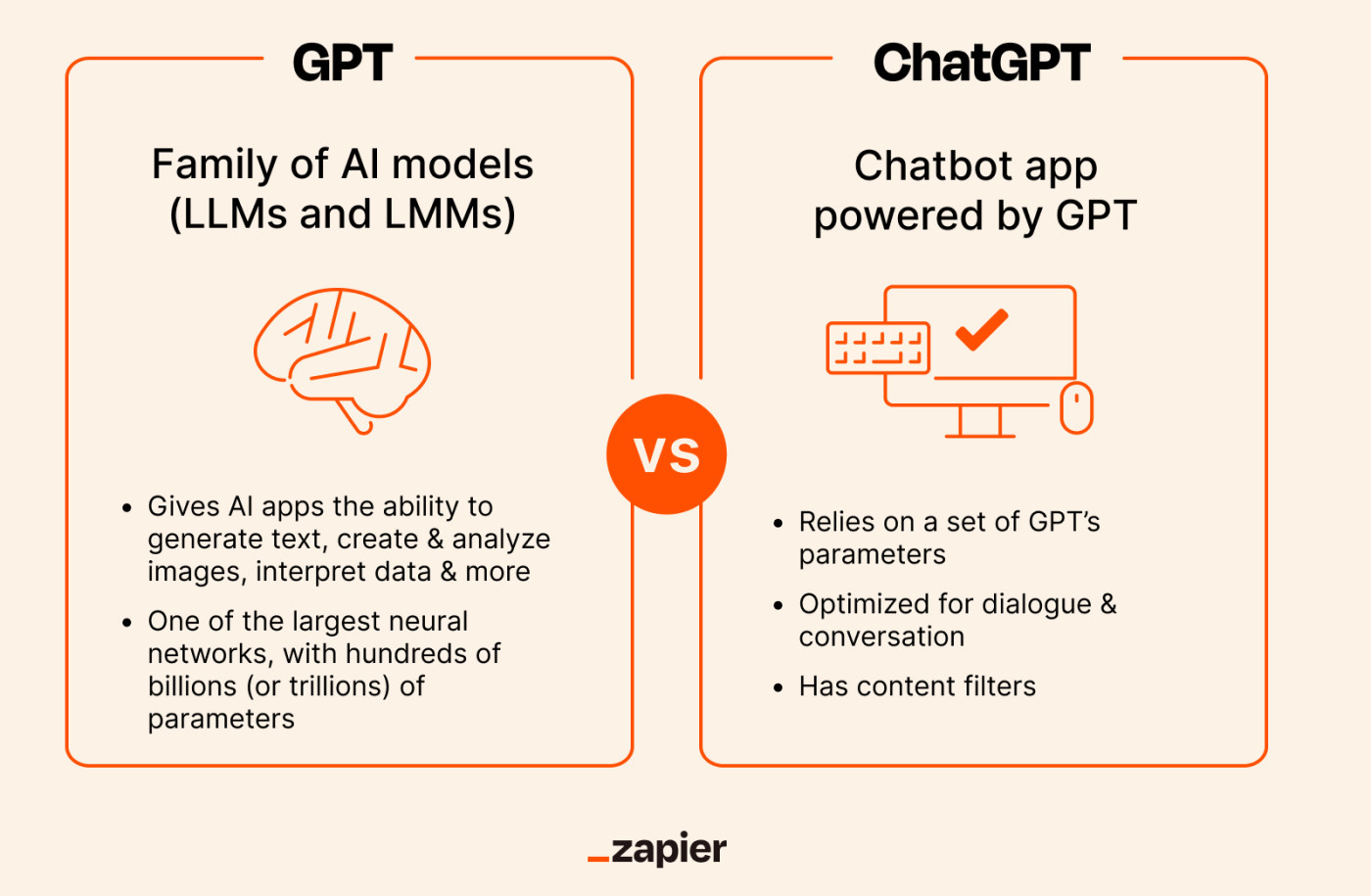

GPT is a family of AI models built by OpenAI.

ChatGPT is a chatbot that uses GPT.

Here, I'm focusing on GPT.

What is GPT?

GPT is a family of AI models built by OpenAI. It stands for Generative Pre-trained Transformer, which is basically a description of what the AI models do and how they work (I'll dig into that more in a minute).

The latest generation, GPT-4, is the fourth, although various versions of GPT-3 are still widely used. Until recently, the GPT family consisted only of large language models (LLMs), but with the release of GPT-4o in May 2024, it now includes large multimodal models (LMMs), too.

While I'll frequently use ChatGPT as an example in this article, it's important to remember that GPT is more than just ChatGPT—it's an entire family of models.

What does GPT do?

GPT models are designed to generate human-like responses to a prompt. Initially, these prompts had to be text-based, but the latest versions of GPT can also work with image and audio inputs (because GPT-4o is multimodal).

This allows GPT-based tools to do things like:

Answer questions in a conversational manner (with text or voice chat)

Generate blog posts and other kinds of short- and long-form content

Edit content for tone, style, and grammar

Summarize long passages of text

Translate text to different languages

Brainstorm ideas

Create and analyze images

Answer questions about charts and graphs

Write code based on design mockups

And that's just the tip of the LMM.

Examples of tools using GPT in the background

As you can imagine, GPT is used in a wide variety of applications. The best known is ChatGPT, OpenAI's chatbot, which uses a fine-tuned version of GPT that's optimized for dialogue and conversation.

But because GPT has an API, lots of developers have been building on top of it. Here are some other places where GPT is working behind the scenes:

Microsoft products. Bing's AI search and Microsoft Copilot (all the AI features Microsoft is adding to Word, Excel, and its other Office apps) have GPT working in the background.

Sudowrite is a GPT-powered app designed to help people write short stories, novels, and other works of fiction. In fact, many (if not most) AI writing generators use GPT as at least one of the models powering the app.

Duolingo is a language learning app that lets you have a conversation with a GPT-powered chatbot in your target language.

Zapier uses GPT in the background for many of its AI capabilities, including its AI chatbot builder and AI assistant, Zapier Central.

In short, if you can think of a situation where generating high-quality, human-like text could help, GPT can probably be employed to do it, and likely already is.

Other AI models, like Google's Gemini, Meta's Llama, and Anthropic's Claude, are becoming more popular for developers, but GPT was the first widely available effective AI API that developers could use to power their own apps, so you'll see it almost everywhere you look—and most places you don't look, too.
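To make "building on top of the API" a little more concrete, here's a minimal sketch of what a request to OpenAI's chat completions endpoint can look like, using only Python's standard library. The helper names (`build_chat_request`, `ask_gpt`) are made up for this example, the model name is just one option, and it assumes your API key lives in an `OPENAI_API_KEY` environment variable. Most developers would use an official client library instead.

```python
import json
import os
import urllib.request

API_URL = "https://api.openai.com/v1/chat/completions"


def build_chat_request(prompt, model="gpt-4o", api_key=None):
    """Build (but do not send) an HTTP request asking a GPT model for a reply.

    Hypothetical helper for illustration; not part of any OpenAI library.
    """
    # Assumption: the key is stored in the OPENAI_API_KEY environment variable.
    api_key = api_key or os.environ.get("OPENAI_API_KEY", "")
    payload = {
        "model": model,
        # The conversation so far; here, a single message from the user.
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


def ask_gpt(prompt):
    """Send the request and return the text of the first reply."""
    with urllib.request.urlopen(build_chat_request(prompt)) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]
```

Calling `ask_gpt("Summarize this paragraph: ...")` would send the prompt over the network and needs a valid key. `build_chat_request` on its own is safe to run and inspect offline.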

How did we get to GPT?

In the mid-2010s, the best performing AI models relied on manually labeled data, like a database with photos of different animals paired with a text description of each animal written by humans. This process is called "supervised learning," and it was used to develop the underlying algorithms for the models.

While supervised learning can be effective in some circumstances, the training datasets are incredibly expensive to produce. Even now, there just isn't that much data suitably labeled and categorized to be used to train LLMs.

Things changed with BERT, Google's LLM introduced in 2018. It used the transformer model—first proposed in a 2017 research paper—which fundamentally simplified how AI algorithms were designed. It allows for the computations to be parallelized (done at the same time), which means significantly reduced training times, and it makes it easier for models to be trained on unstructured data. Not only did it make AI models better; it also made them quicker and cheaper to produce.

From there, the first version of GPT was documented in a paper published in 2018, and GPT-2 was released the following year. It was capable of generating a few sentences at a time before things got weird. While both represented significant advances in the field of AI research, neither was suitable for large-scale real-world use. That changed with the launch of GPT-3 in 2020. While it took a while—and the launch of ChatGPT—to really take off, it was the first truly useful, widely available LLM.

And that's why GPT is the big name in LLMs right now, even though it's far from the only large language model available. Plus, OpenAI continues to upgrade—most recently, with GPT-4o.

How does GPT work?

"Generative Pre-trained Transformer model" is really just a description of what the GPT family of models does, how they were designed, and how they work.

I'm going to use GPT-3 as an example because it's the model that we have the most information about. (Unfortunately, OpenAI has become a lot more secretive about its processes over the years.)

GPT-3 was pre-trained on vast amounts of unlabeled data. It was basically fed a huge slice of the open internet and then left to crunch through it and draw its own connections. Learning from unlabeled data like this is called unsupervised (or self-supervised) learning, and at this scale it relies on deep learning, the branch of machine learning behind most modern AI tools.

It's important to keep in mind that GPT doesn't understand text quite the same way as humans do. Instead of words, AI models break text down into tokens. Many words map to single tokens, though longer or more complex words often break down into multiple tokens. GPT-3 was trained on roughly 500 billion tokens.
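Here's a toy sketch of the idea of splitting text into tokens. It is not GPT's actual tokenizer: GPT models use byte-pair encoding with a vocabulary learned from data, while this example swaps in a simple greedy longest-match over a tiny, hand-picked vocabulary. The `VOCAB` set and the `tokenize` function are made up for illustration.

```python
# A tiny, hand-picked vocabulary (an assumption for this example).
# Real GPT vocabularies are learned from data and are far larger.
VOCAB = {"the", "bear", "ing", "s", "un", "believ", "able", " "}


def tokenize(text, vocab=VOCAB):
    """Split text into the longest matching vocabulary pieces, left to right."""
    tokens = []
    i = 0
    while i < len(text):
        # Try the longest possible piece first, then shrink it.
        for j in range(len(text), i, -1):
            if text[i:j] in vocab:
                tokens.append(text[i:j])
                i = j
                break
        else:
            # No piece matched: emit the single character as its own token.
            tokens.append(text[i])
            i += 1
    return tokens


print(tokenize("the"))           # ['the']
print(tokenize("unbelievable"))  # ['un', 'believ', 'able']
```

The short, common word comes out as one token, while the longer word is split into three pieces, which is the pattern described above.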

All this training is used to create a complex, many-layered, weighted algorithm loosely modeled after the human brain, called a deep learning neural network. It's what allows GPT-3 to pick up on patterns and relationships in the text data and tap into the ability to create human-like responses. GPT-3's neural network has 175 billion parameters (or variables). Given an input (your prompt), it uses the values and weightings of those parameters, plus a small amount of randomness, to output whatever it calculates best matches your request.
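The "small amount of randomness" can be sketched in a few lines. In this simplified picture, the network assigns a score to each candidate next token, the scores are turned into probabilities, and one token is drawn at random according to those probabilities. The candidate words and scores below are invented, and `temperature` is used here as the knob that controls how much randomness gets in; a real model scores tens of thousands of tokens at every step.

```python
import math
import random


def softmax(scores, temperature=1.0):
    """Turn raw scores into probabilities that sum to 1."""
    scaled = [s / temperature for s in scores]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def sample_next_token(candidates, scores, temperature=1.0, rng=random):
    """Pick one candidate at random, weighted by its probability."""
    probs = softmax(scores, temperature)
    return rng.choices(candidates, weights=probs, k=1)[0]


# Invented scores for the word that follows "The bear ate the ..."
candidates = ["honey", "fish", "spreadsheet"]
scores = [2.0, 1.5, -1.0]

print(softmax(scores, temperature=1.0))  # fairly spread out
print(softmax(scores, temperature=0.2))  # top choice dominates
print(sample_next_token(candidates, scores))
```

A lower temperature concentrates probability on the highest-scoring token, so the output gets more predictable; a higher one flattens the distribution and makes surprising picks more likely.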

GPT's network uses the transformer architecture—it's the "T" in GPT. At the core of transformers is a process called "self-attention." Older recurrent neural networks (RNNs) read text from left-to-right. Transformer-based networks, on the other hand, read every token in a sentence at the same time and compare each token to all the others. This allows them to direct their "attention" to the most relevant tokens, no matter where they are in the text.
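Here's a stripped-down sketch of that comparison step. It assumes each token is already a small list of numbers and skips the learned query, key, and value projections that real transformers apply first, so treat it as an illustration of the idea rather than how GPT is implemented. The function names are made up for this example.

```python
import math


def softmax(scores):
    """Turn raw scores into weights that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(vectors):
    """Compare every token vector with every other and blend them.

    Returns (outputs, weights). weights[i][j] is how much token i
    "attends" to token j.
    """
    dim = len(vectors[0])
    outputs, all_weights = [], []
    for query in vectors:
        # Dot product with every token, scaled by sqrt(dimension).
        scores = [
            sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
            for key in vectors
        ]
        weights = softmax(scores)
        # Each output is a weighted average of all the token vectors.
        mixed = [
            sum(w * vec[d] for w, vec in zip(weights, vectors))
            for d in range(dim)
        ]
        outputs.append(mixed)
        all_weights.append(weights)
    return outputs, all_weights


# Three toy tokens; the first and third point the same way.
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 0.0]]
outputs, weights = self_attention(tokens)
print(weights[0])  # token 0 weights tokens 0 and 2 above token 1
```

Because every row of weights is computed independently of the others, the work for all tokens can be done at the same time, which is the parallelism that makes transformers fast to train.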

Of course, this is all vastly simplifying things. GPT can't really understand anything. Instead, every token is encoded as a vector (a list of numbers that can be pictured as a point in space). The closer together two token vectors are, the more closely related GPT treats them as being. This is why it's able to process the difference between brown bears, the right to bear arms, and ball bearings. While all use the string of letters "bear," it's encoded in such a way that the neural network can tell from context what meaning is most likely to be relevant.
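One common way to measure how "close" two vectors are is cosine similarity, sketched below. The three-number vectors are invented for this example; real models use vectors with hundreds or thousands of dimensions, and their values are learned during training rather than typed in by hand.

```python
import math


def cosine_similarity(a, b):
    """Return a score near 1 for vectors pointing the same way, near 0 for unrelated ones."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(x * x for x in b))
    return dot / (norm_a * norm_b)


# Made-up vectors for three senses of "bear" plus a related word.
EMBEDDINGS = {
    "bear (animal)": [0.9, 0.1, 0.0],
    "grizzly": [0.8, 0.2, 0.1],
    "bear (carry)": [0.1, 0.9, 0.2],
    "bearing (machine part)": [0.0, 0.2, 0.9],
}

animal = EMBEDDINGS["bear (animal)"]
for name, vector in EMBEDDINGS.items():
    print(f"{name}: {cosine_similarity(animal, vector):.2f}")
```

With these toy numbers, "grizzly" scores much closer to the animal sense of "bear" than the other two senses do, even though all three senses share the same letters.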

Another important note: that's all just describing LLMs. Now that GPT offers a multimodal model (GPT-4o), things are different. In addition to an unimaginable quantity of text, multimodal models are also trained on millions or billions of images (with accompanying text descriptions), video clips, audio snippets, and examples of any other modality that the AI model is designed to understand (e.g., code). Crucially, all this training happens at the same time.

It's a lot, I know, and that's just a basic overview.

Is GPT safe?

Given that GPT is trained on the open internet, including a lot of toxic, harmful, and just downright incorrect content, OpenAI has put a lot of work into making it as safe as possible for people to use.

OpenAI calls the process "alignment." The idea is that AI systems should align with human values and follow human intent, not do their own thing and go rogue. A big part of this is a process called reinforcement learning from human feedback (RLHF).

The basics of it are that AI trainers at OpenAI created demonstration data showing GPT how to respond to typical prompts. From that, they built an AI reward model with comparison data. Multiple model responses are ranked, so the AI can learn which responses are and aren't appropriate in given situations. The AI was then trained using the reward model and fine-tuned to give helpful responses. (This is also how ChatGPT was optimized to respond to dialogue.)
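To give a feel for how ranked responses can become a training signal, here's a rough sketch. It assumes a common approach in which a ranking is broken into "better vs. worse" pairs and the reward model is penalized whenever it scores the worse response higher. The function names and reward numbers are made up, and this isn't a description of OpenAI's exact setup.

```python
import math


def rank_to_pairs(ranked_responses):
    """Turn a best-to-worst ranking into (better, worse) comparison pairs."""
    return [
        (better, worse)
        for i, better in enumerate(ranked_responses)
        for worse in ranked_responses[i + 1:]
    ]


def preference_loss(reward_preferred, reward_rejected):
    """Pairwise loss: small when the preferred response gets the higher reward.

    Equivalent to -log(sigmoid(reward_preferred - reward_rejected)).
    """
    margin = reward_preferred - reward_rejected
    return math.log(1 + math.exp(-margin))


# A human ranked three responses from best to worst.
pairs = rank_to_pairs(["helpful answer", "vague answer", "rude answer"])
print(pairs)

# Invented reward scores: agreeing with the human costs little...
print(preference_loss(2.0, 0.0))
# ...while disagreeing costs a lot, pushing the reward model to change.
print(preference_loss(0.0, 2.0))
```

Training the reward model means nudging its scores to shrink this loss across many such pairs. The reward model's scores then serve as feedback when fine-tuning the main model.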

While no system is perfect, OpenAI has done a very good job of making GPT as helpful and harmless as possible—at least when people aren't actively trying to get around its guardrails.

How to try GPT for yourself

Again, GPT is hiding in all sorts of apps, but here are a few ways to use it right now:

ChatGPT. The simplest way to try GPT is through ChatGPT. While it's only one implementation of GPT, it's a great way to explore what the models can and can't do.

OpenAI playground. If you want to peek behind the curtain a little more, try the OpenAI playground, where you can adjust all sorts of settings.

AI writing generators. Most AI text generators use GPT behind the scenes (some are less transparent about it than others). These tools are designed to generate longer-form text (usually for content creators) rather than respond like a chatbot.

AI productivity tools. All sorts of AI productivity tools, from AI meeting assistants to AI note-taking tools, use GPT, so if there's a category of app you use a lot, do some digging to see if any apps in the category have features that are powered by GPT.

Zapier. Zapier integrates with OpenAI and ChatGPT. That allows you to access GPT directly from all the other apps you use at work. Learn more about how to automate ChatGPT, or get started with one of these pre-made workflows.

Start a conversation with ChatGPT when a prompt is posted in a particular Slack channel

Create email copy with ChatGPT from new Gmail emails and save as drafts in Gmail

Generate conversations in ChatGPT with new emails in Gmail


Pick the route that seems easiest—or most fun—for you, and you'll quickly get a feel for the power of GPT.


This article was originally published in October 2023. The most recent update was in May 2024.