You're probably saying to yourself, "There's no way they could have migrated thousands of ingresses in a few short weeks," and you're absolutely right! When the Kubernetes community announced that ingress-nginx was going end-of-life, we were already knee-deep in migrating Zapier's ingress layer. Some might call that good planning but, as they said in The Wire, "Sometimes it's better to be lucky than good." The EOL announcement validated what we had already realized: our ingress story needed a rewrite.

Why move to Gateway API?

Years of tech debt, unsolved

Over several years of growth, we had accumulated a patchwork of Classic Load Balancers, Application Load Balancers, Network Load Balancers, and service-specific ingress controllers. It worked, but it was noisy, hard to maintain, and increasingly risky to keep operating as is. Each development, staging, and production cluster had its own flavor of ingress: multiple controllers, mixed annotations, and differing health check semantics. The result: lots of configuration drift between controllers, and the occasional "works in staging, 503s in production."

In the meantime, Gateway API reached v1 back in 2023 and has since gained a growing number of stable implementations under active development by the wider community. The Ingress API remains frozen to this day, and it was clear to us that Gateway API is the future of traffic routing in Kubernetes.

Migrating to Gateway API gave us a number of opportunities to start with a clean slate:

Clear separation of responsibilities (Gateways, Routes, Policies)

Lintable YAML over annotation chaos

Richer routing, observability, and security primitives

Better positioned to take advantage of new feature developments and bug fixes

More importantly, it aligned with Zapier's goal of developer autonomy. Traffic configurations like Envoy Gateway's BackendTrafficPolicies live alongside app manifests in source control, so teams can own their traffic just as they own their code.

How we selected Envoy Gateway

In March 2025, the Infrastructure team began evaluating every realistic Gateway API implementation. We looked at Istio Gateway, Gloo Gateway, NGINX Gateway, HAProxy Gateway, Traefik, and Envoy Gateway. The goal was simple: replace ingress-nginx with something modern, reliable, and scalable without introducing unnecessary complexity.

After several proofs of concept and side-by-side comparisons, we chose Envoy Gateway. A few reasons made the decision clear.

Gloo Gateway locked important functionality behind enterprise licenses.

Istio Gateway would likely have turned this migration into a service mesh migration, resulting in more complexity for little gain.

HAProxy's Gateway API feature set wasn't mature enough for our needs.

NGINX Gateway and Traefik were also too early. Both were promising, but neither had the depth, stability, or policy support we needed to migrate hundreds of services.

This left us with Envoy Gateway. Envoy is a proven data plane. It has a long track record in high-scale environments, a strong community, and a steady release cadence. We needed something battle-tested, and Envoy has been that for years. Envoy Gateway hit the balance: simple control plane, strong Gateway API support, fast development, and a clear extension path through external authorization, traffic policies, and filters. It gave us what we needed today and a platform we could build on for years.

The migration

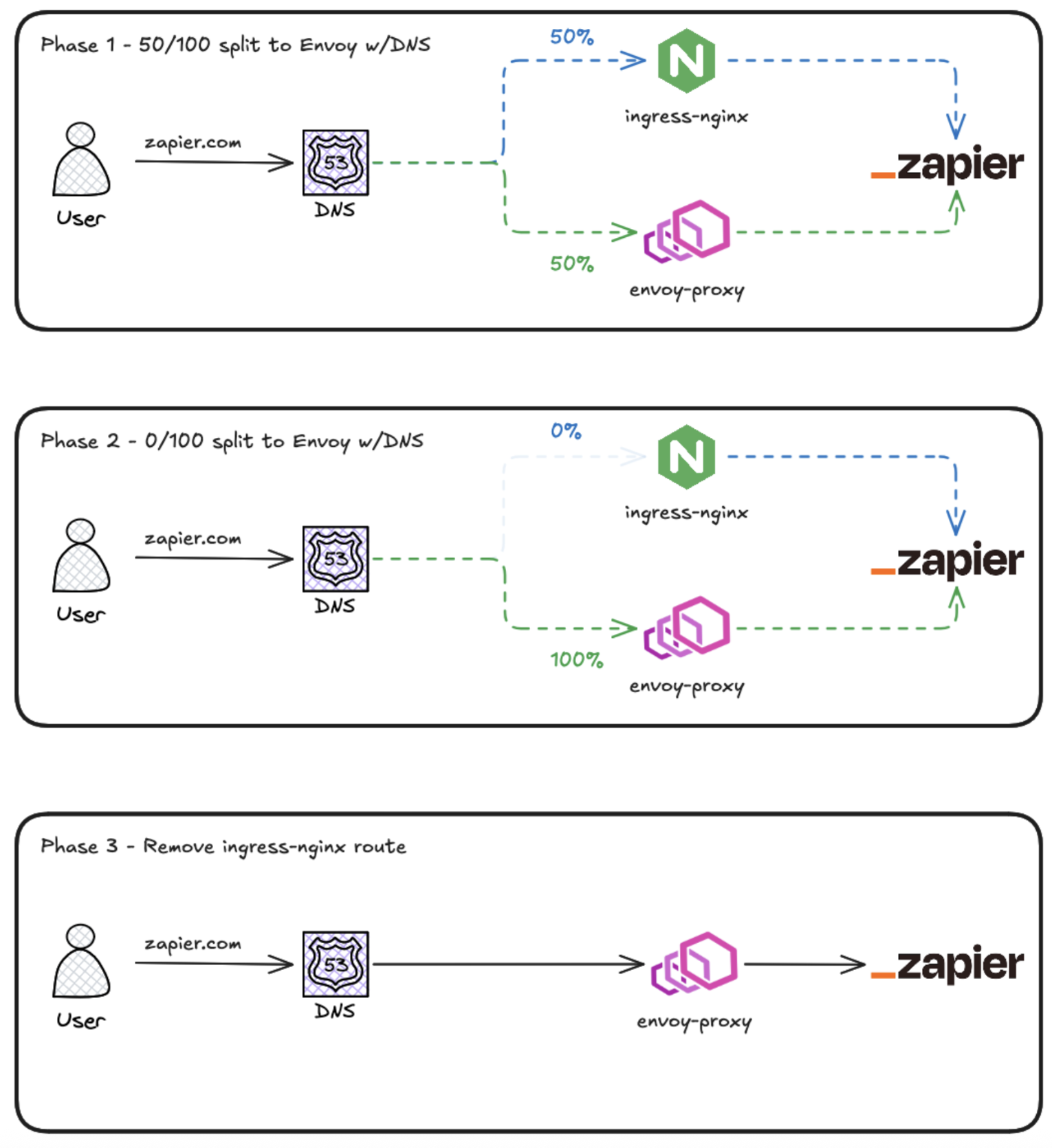

We approached the migration like any other large refactor: incremental, observable, and reversible. Every migration should be easy to roll back to minimize potential impact.

Using internal tracking sheets and a long trail of Jira tickets, we batched hundreds of ingress resources, prioritized high-impact services, and rolled them out behind ArgoCD-managed Helm charts. Each batch was tracked, monitored, and validated with end-to-end testing before being promoted to production.

Weighted DNS was our friend. It allowed us to gradually shift traffic and observe metrics and traces to see if anything unexpected appeared.
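As a rough illustration of what a gradual shift looks like, here is a minimal Python sketch. It assumes weighted records behave the way they commonly do, with each record receiving a share of answers equal to its weight divided by the sum of all weights. The record names and the step schedule are hypothetical and are not the values used in this migration.

```python
def traffic_share(weights):
    """Return each target's expected share of DNS answers given its record weight."""
    total = sum(weights.values())
    if total == 0:
        raise ValueError("at least one record needs a non-zero weight")
    return {target: weight / total for target, weight in weights.items()}


# Hypothetical rollout schedule: (legacy ingress weight, Envoy Gateway weight).
SCHEDULE = [(100, 0), (95, 5), (75, 25), (50, 50), (0, 100)]

for legacy, envoy in SCHEDULE:
    share = traffic_share({"ingress-nginx": legacy, "envoy-gateway": envoy})
    print(f"nginx={share['ingress-nginx']:.0%} envoy={share['envoy-gateway']:.0%}")
```

Rolling back under this model is just a matter of moving the weights one step back up the schedule, which is what makes the approach reversible.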

We chose to migrate services in groups: first in staging, followed by a bake-in period, then in production. This progressive rollout kept service environments aligned and made debugging simpler. A "migrate all staging -> waiting period -> migrate all production" rollout would have left services running on mixed setups for too long and made troubleshooting burdensome.

When theory met reality

Then came the fun part… debugging everything that did not show up in the planning docs. Some of the earliest challenges were surprisingly fundamental. We had to build and standardize our own Helm charts for xRoutes, since many third-party services still only ship with Ingress-based templates. Migrating nginx annotations to Envoy configuration was not always straightforward, and tooling like ingress2gateway did not work for us at the time. It does look like more tools to ease migration work are being released, but during this migration we were very much on our own.
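To make the shape of that translation concrete, here is a minimal Python sketch that maps the structural parts of an Ingress onto HTTPRoute objects. It is not the Helm chart logic described above. The function name, the gateway names, and the choice to treat `ImplementationSpecific` paths as `PathPrefix` are all assumptions made for illustration. Annotations are deliberately left untranslated, since that is the part that needs case-by-case work.

```python
PATH_TYPES = {"Prefix": "PathPrefix", "Exact": "Exact"}


def ingress_to_httproutes(ingress, gateway_name, gateway_namespace):
    """Build one HTTPRoute dict per Ingress rule. Annotations are NOT translated."""
    meta = ingress["metadata"]
    routes = []
    for index, rule in enumerate(ingress["spec"].get("rules", [])):
        http_rules = []
        for path in rule.get("http", {}).get("paths", []):
            service = path["backend"]["service"]
            http_rules.append({
                "matches": [{"path": {
                    # ImplementationSpecific has no direct equivalent; PathPrefix is an assumption.
                    "type": PATH_TYPES.get(path.get("pathType"), "PathPrefix"),
                    "value": path.get("path", "/"),
                }}],
                # Assumes numeric service ports; named ports would need a lookup.
                "backendRefs": [{"name": service["name"], "port": service["port"]["number"]}],
            })
        route = {
            "apiVersion": "gateway.networking.k8s.io/v1",
            "kind": "HTTPRoute",
            "metadata": {
                "name": f"{meta['name']}-{index}",
                "namespace": meta.get("namespace", "default"),
            },
            "spec": {
                "parentRefs": [{"name": gateway_name, "namespace": gateway_namespace}],
                "rules": http_rules,
            },
        }
        if rule.get("host"):
            route["spec"]["hostnames"] = [rule["host"]]
        routes.append(route)
    return routes
```

The structural mapping is the easy part. Anything expressed through nginx annotations (timeouts, body sizes, rewrites) has to be re-expressed as Envoy Gateway policies or route filters, and that cannot be derived mechanically from a sketch like this.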

We also had to develop a deep understanding of nginx's default behavior and how it differed from Envoy's. Assumptions that had been true for years no longer applied, and small differences in defaults (connection handling, buffering, health checks, proxy behavior) could create very real issues if left unaddressed.

Once we were past the basics, the real debugging began.

The case of the five-second ghosts

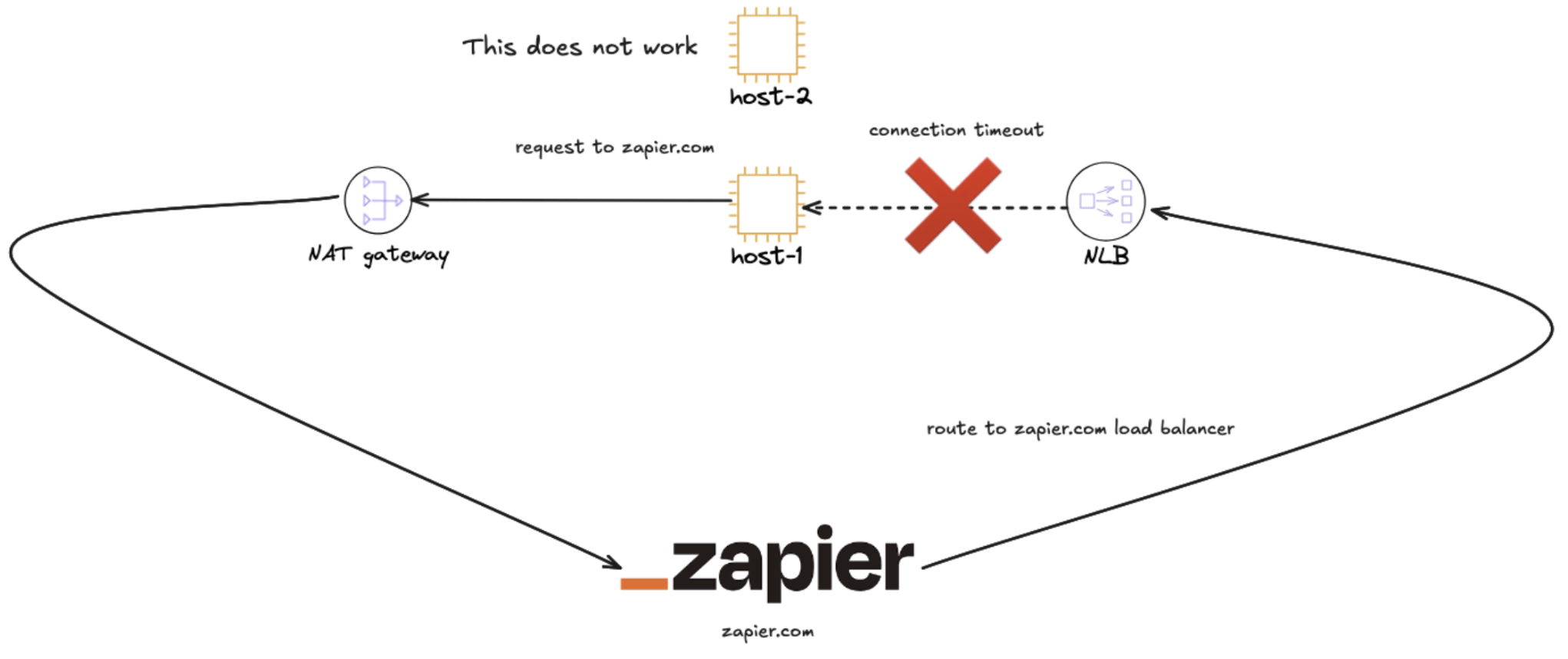

Mid-rollout, we started seeing sporadic 500s that almost always came in pairs about five to six seconds apart. Synthetic tests could not reproduce the issue; only real traffic did. After tuning NLB health checks, adjusting termination drains, and digging into Gunicorn and Gevent keep-alive behavior, we finally found the culprit buried in an AWS document:

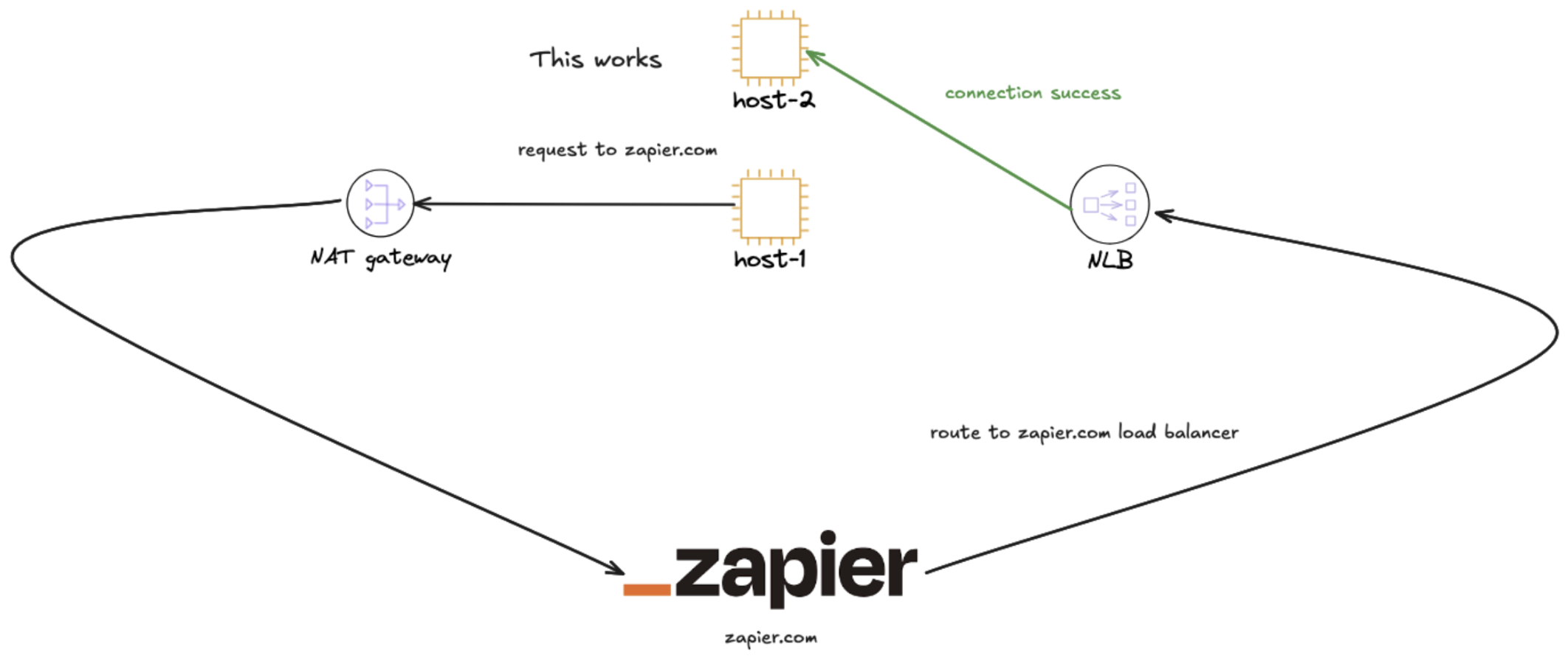

If client IP preservation is enabled and the request is routed to the same instance it originated from, the connection times out, even if the pods have different IPs.

Our Envoy Network Load Balancer configuration had client IP preservation turned on, while the nginx configuration did not. We had enabled client IP preservation on our AWS NLB because we wanted to keep tracking the real client IP via the X-Forwarded-For header, and at the time we were not yet using Proxy Protocol. This ensured Envoy could forward the original client IP without configuration changes.

However, when both the caller and the destination service landed on the same EC2 instance, the preserved client IP caused the Linux kernel's connection tracking to interpret traffic incorrectly, eventually resulting in the packets being dropped at the Load Balancer. See the figure below:

If, however, the request landed on a different EC2 node, it would succeed.

The solution:

Disable client IP preservation.

Enable Proxy Protocol v2 across all NLBs.

Patch Envoy readiness checks so they understand proxy protocol payloads.

Validate that X-Forwarded-For continues to append correctly.
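To show why anything receiving these connections has to understand proxy protocol, here is a minimal Python sketch of a Proxy Protocol v2 header parser. With v2 enabled, each connection starts with a binary header carrying the original client address ahead of the application bytes. The sketch follows the published PROXY protocol layout but only handles TCP over IPv4 and ignores optional TLV extensions. It is an illustration, not the patch applied to Envoy.

```python
import socket
import struct

PP2_SIGNATURE = b"\r\n\r\n\x00\r\nQUIT\n"  # fixed 12-byte signature from the PROXY protocol spec


def parse_proxy_v2(data):
    """Split a buffer into (addresses, payload); addresses is None for LOCAL connections."""
    if len(data) < 16 or data[:12] != PP2_SIGNATURE:
        raise ValueError("missing PROXY protocol v2 signature")
    ver_cmd, fam_proto, length = struct.unpack("!BBH", data[12:16])
    if ver_cmd >> 4 != 2:
        raise ValueError("unsupported PROXY protocol version")
    if len(data) < 16 + length:
        raise ValueError("truncated PROXY protocol header")
    body, payload = data[16:16 + length], data[16 + length:]
    if ver_cmd & 0x0F == 0x0:  # LOCAL: sent by the proxy itself, carries no client address
        return None, payload
    if fam_proto != 0x11 or length < 12:  # this sketch only handles TCP over IPv4
        raise ValueError("only TCP over IPv4 is handled here")
    src, dst, src_port, dst_port = struct.unpack("!4s4sHH", body[:12])
    return (socket.inet_ntoa(src), src_port, socket.inet_ntoa(dst), dst_port), payload
```

A listener that expects plain HTTP will see the signature bytes as garbage, which is why readiness checks and listeners have to be switched over together.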

After the fix we saw a short rollout blip and then silence. Problem solved, and a simple reminder that sometimes the issue lives deep in the network stack.

The quiet 503 problem

Not every mystery was about memory or routing. For more than a week, the team chased down a steady stream of 503 errors on the backend that powers Webhooks by Zapier. The rate was low but persistent, and it only appeared after moving the service to Envoy Gateway.

We explored every angle. We tuned connection timeouts and TCP keepalives, experimented with pod routing, ran load tests in staging, enabled detailed access logs, and reviewed worker and proxy settings. None of it explained the pattern.

A few Envoy metrics helped narrow our focus:

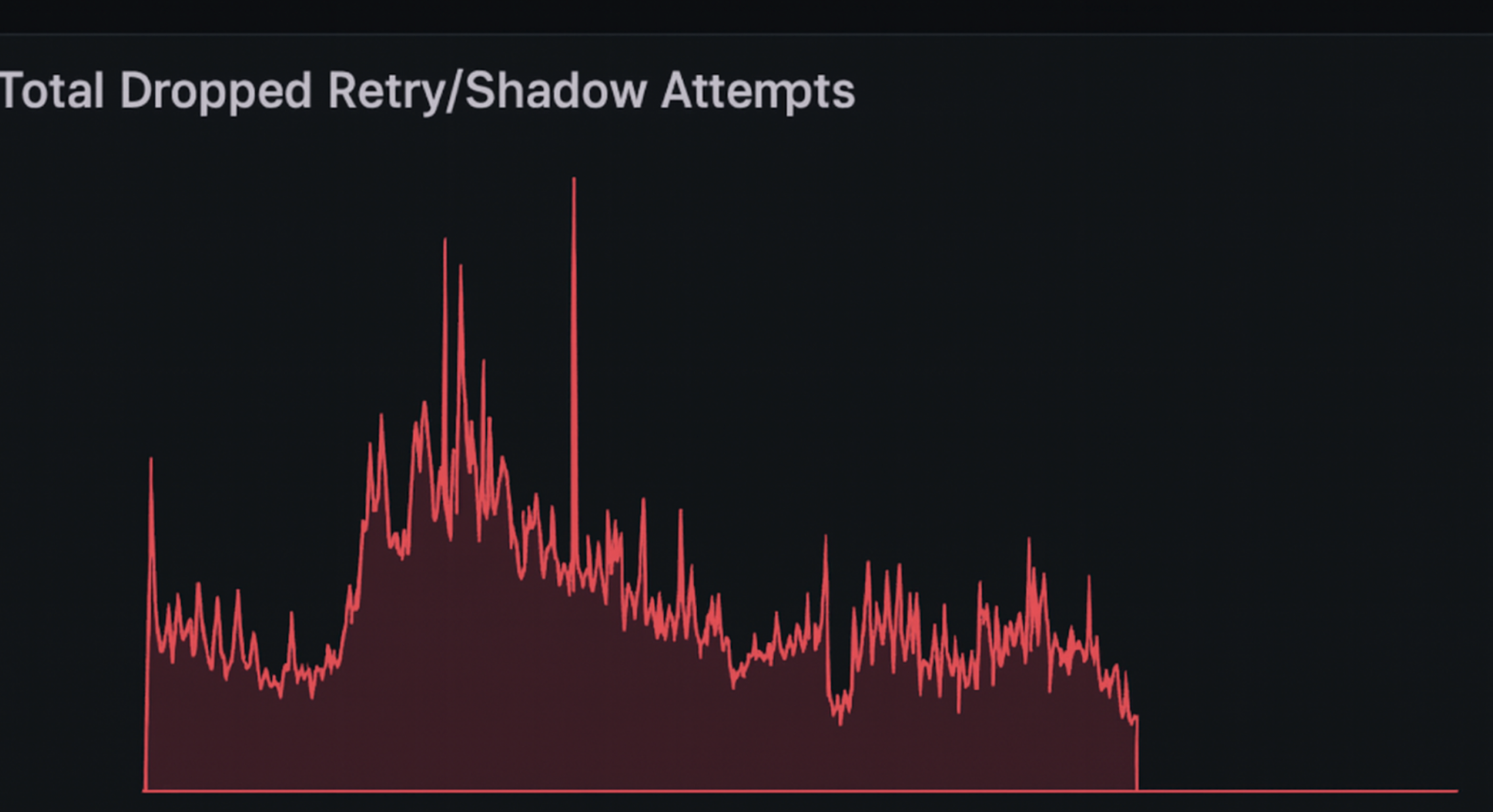

envoy_cluster_retry_or_shadow_abandoned: This metric counts the number of retries or shadow requests Envoy had to abandon because the buffer limit was reached. Any rise here suggests that request bodies are too large for the configured buffer size.

envoy_cluster_upstream_rq_retry: This metric tracks how often Envoy is retrying requests. It helped confirm that some retries were behaving as expected while others were never getting off the ground.

envoy_cluster_upstream_cx_destroy_remote_with_active_rq: This metric counts cases where an upstream connection is closed while a request is still active. It helped us rule out upstream instability as the cause.

Together, these metrics showed a clear pattern: some retries were being attempted, but others were abandoned before they reached the upstream service. That pushed us to look earlier in the retry flow. The breakthrough came when we discovered that the failures only occurred on large webhook payloads. Envoy's default connection buffer limit was not large enough to hold certain request bodies during a retry. When a retry was triggered, there was not enough room to buffer the payload, so Envoy dropped it and returned a 503.

The solution:

Increasing the connection.bufferLimit in the ClientTrafficPolicy gave Envoy enough space to safely buffer and retry large webhook requests.
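The interaction between retries and buffering can be captured in a simplified mental model, sketched below in Python. This is not Envoy's implementation. The function, its parameters, and the 32 KiB limit are illustrative assumptions. The counter names simply mirror the two metrics discussed above.

```python
BUFFER_LIMIT = 32 * 1024  # illustrative value only, not a statement about any default


def send_with_retry(body_size, buffer_limit, upstream_results, max_retries=1):
    """Model one request. upstream_results holds one boolean per attempt (True = success)."""
    results = iter(upstream_results)
    stats = {"upstream_rq_retry": 0, "retry_or_shadow_abandoned": 0}
    if next(results, False):
        return 200, stats
    for _ in range(max_retries):
        # A retry replays the request body, so the body must fit in the buffer.
        if body_size > buffer_limit:
            stats["retry_or_shadow_abandoned"] += 1
            return 503, stats
        stats["upstream_rq_retry"] += 1
        if next(results, False):
            return 200, stats
    return 503, stats


# Same upstream hiccup, two different outcomes depending on payload size.
print(send_with_retry(10_000, BUFFER_LIMIT, [False, True]))
print(send_with_retry(5_000_000, BUFFER_LIMIT, [False, True]))
```

In this model the small request recovers on its retry, while the large one is abandoned before a second attempt is made, which matches the pattern of some retries succeeding and others never starting.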

After rolling out the change across all environments, the abandoned retries stopped and the hooks service returned to normal behavior. This was a good reminder that retries do not work in isolation. They depend on the system having enough room to hold the data that makes a retry possible in the first place.

Reconciliation woes

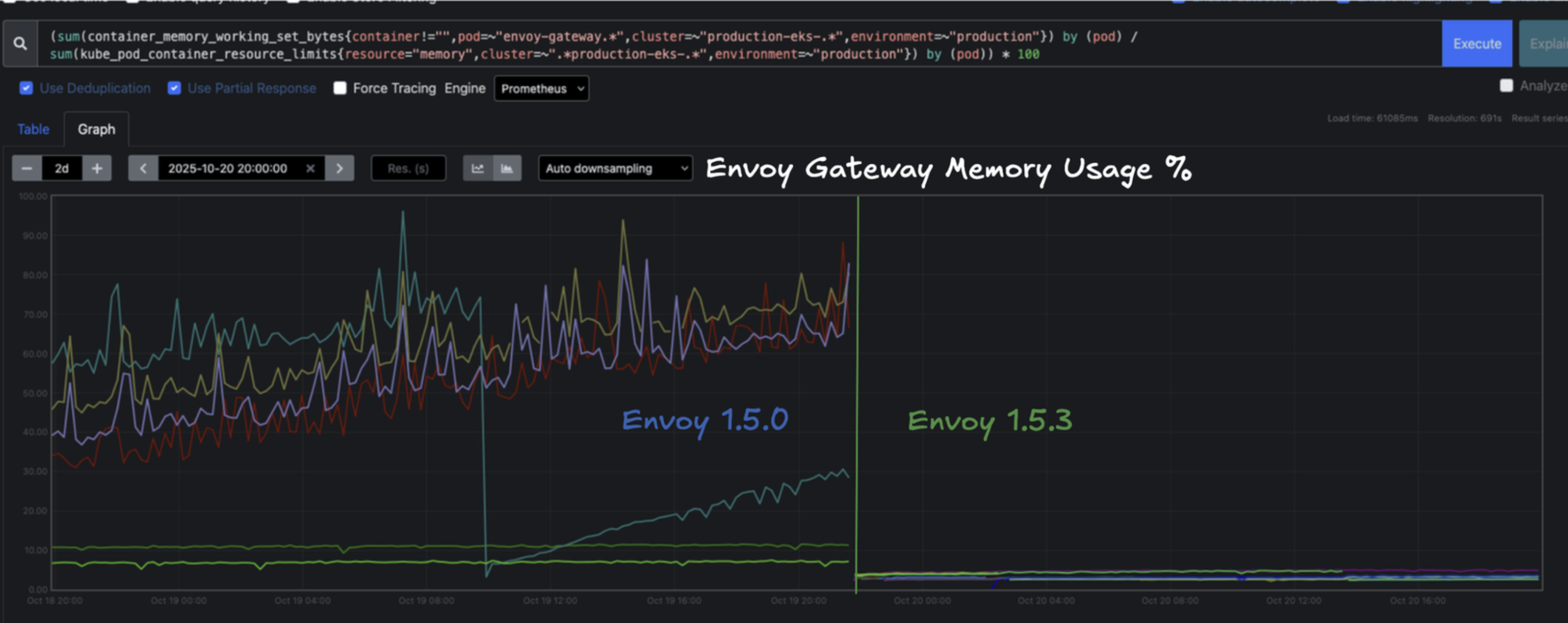

In early September, we noticed Envoy Gateway pods in our development cluster repeatedly hitting their memory limits. Grafana showed more than 100,000 reconciliation events in a cluster that should have been mostly idle. Something in the Gateway controller was looping far too often.

Profiling the control plane with pprof revealed that the BackendTrafficPolicy merge logic was the main culprit, or so we thought. Each reconciliation triggered expensive JSON merge patches and deep copy operations that consumed gigabytes of memory.

The solution:

After deeper profiling and event tracing, we found the reconcile loop wasn't random: the Vault operator was repeatedly refreshing the vault-tls Secret in every namespace. Each Secret update fanned out into a full Envoy Gateway reconciliation across all monitored resources, driving up memory usage and restart rates. Envoy Gateway must watch Secrets (TLS on Gateways, OIDC/JWT credentials, Backend/ClientTraffic policies, BackendTLSPolicy CAs), which meant that every change by the Vault operator was very expensive.

The fix turned out to be removing a single wildcard in the Vault operator configuration:

caNamespaces:
  - "*"

Once we dropped the global CA distribution, Secret updates fell to normal levels, reconcile counts returned to baseline, controller memory stabilized, and the OOMs on the control plane stopped. It was a good reminder that control-plane tuning matters as much as data-plane performance, and sometimes stability comes from removing just one asterisk.

Performance improvements along the way

From planning to execution, this migration took close to a year. Over that time, we upgraded Envoy Gateway several times, and each release gave us more confidence that we had picked the right horse.

We started on Envoy Gateway 1.3 and are currently on 1.5.6, with plans to move to 1.6.x soon. The frequent updates and steady performance gains have been encouraging. Our staging environments are particularly demanding. With dozens of ephemeral lab deployments running at once, we churn through a large number of HTTPRoutes, policies, and supporting services. Early on, this created pressure on the Gateway control planes, and we saw frequent out-of-memory (OOM) errors on the pods.

The upgrade from 1.5.0 to 1.5.6 changed that entirely. Remember our conundrum above around JSONMerge and high memory usage? Well, that's now a thing of the past. Memory usage dropped from a consistent 80% average to below 20%, and we have not seen a related OOM event on the control plane since. These improvements make it easier to trust Envoy Gateway as the foundation for everything we run behind it. It's fast, stable, and improving with every release.

Looking forward: Visibility and smarter routing

Stability was step one. Step two is insight and control.

We wrote an in-house Authz service which acts as the external authorization layer for Envoy Gateway. This allows us to enrich incoming requests with customer context.

Request enrichment

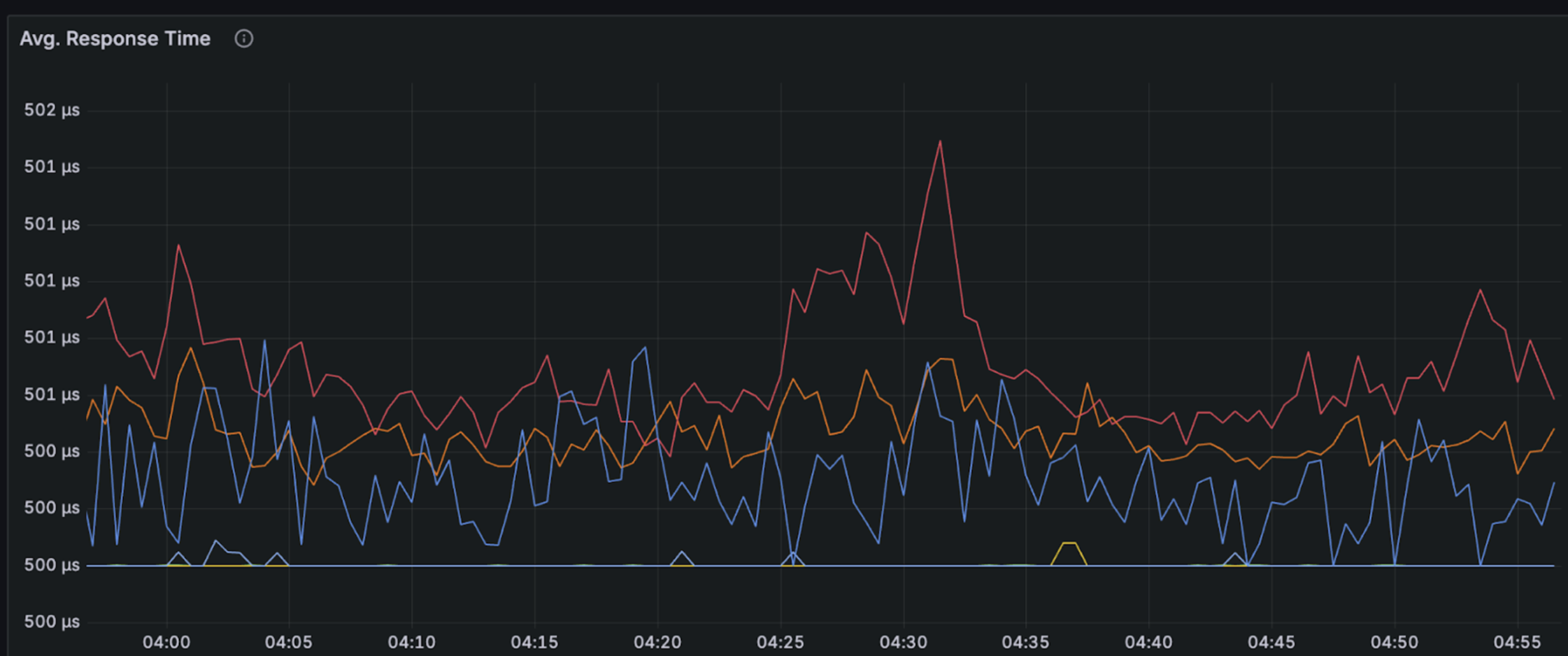

Our service connects to Envoy through the ext_authz interface, which allows us to add context-bearing headers before passing the request through. Those headers are trusted because the proxy strips any client-supplied versions thanks to ClientTrafficPolicy settings, ensuring only we can set them. The entire process introduces sub-millisecond latency per request while serving tens of thousands of requests per second.
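The strip-then-enrich pattern described above can be sketched in a few lines of Python. This models the idea only, not the Authz service or Envoy's configuration. The header prefix and context keys are hypothetical names chosen for the example.

```python
TRUSTED_PREFIX = "x-example-ctx-"  # hypothetical prefix reserved for proxy-set headers


def sanitize_and_enrich(client_headers, authz_context):
    """Drop client-supplied trusted headers, then add the ones derived by authorization."""
    cleaned = {
        name.lower(): value
        for name, value in client_headers.items()
        if not name.lower().startswith(TRUSTED_PREFIX)
    }
    for key, value in authz_context.items():
        cleaned[f"{TRUSTED_PREFIX}{key}"] = value
    return cleaned


# A client tries to spoof an account header; the spoofed value never reaches the backend.
incoming = {"User-Agent": "curl/8.0", "X-Example-Ctx-Account-Id": "999"}
print(sanitize_and_enrich(incoming, {"account-id": "42"}))
```

The ordering matters: stripping has to happen before enrichment, otherwise a client-supplied value could survive alongside, or instead of, the verified one.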

How it improves routing

With our Authz service in place, routing decisions can depend on verified identity and context rather than just request paths. Routes can require certain headers before forwarding traffic; rate limits and region selection can vary per account or user. By moving these decisions to the edge, we make routing faster, safer, and easier to reason about. Applications no longer need to implement custom JWT logic or network filters. Envoy and Authz handle it once, consistently, for every service. It gives Zapier a clear picture of who is using what, and it gives us the ability to shape that traffic intelligently.

Clear SLOs and better signals

With NGINX we never had meaningful SLOs. That's not NGINX's fault, but when you are rebuilding a system, you want to do it better this time around. That's why we put in the effort with Envoy Gateway to create SLOs that measure Envoy's actual behavior, not the health of everything behind it. The signals are cleaner and much easier to trust. If Envoy fails to route traffic, or stops responding, the SLOs catch it. If something downstream is having problems, Envoy's SLOs stay green, allowing engineers to focus on where the problem truly lies.

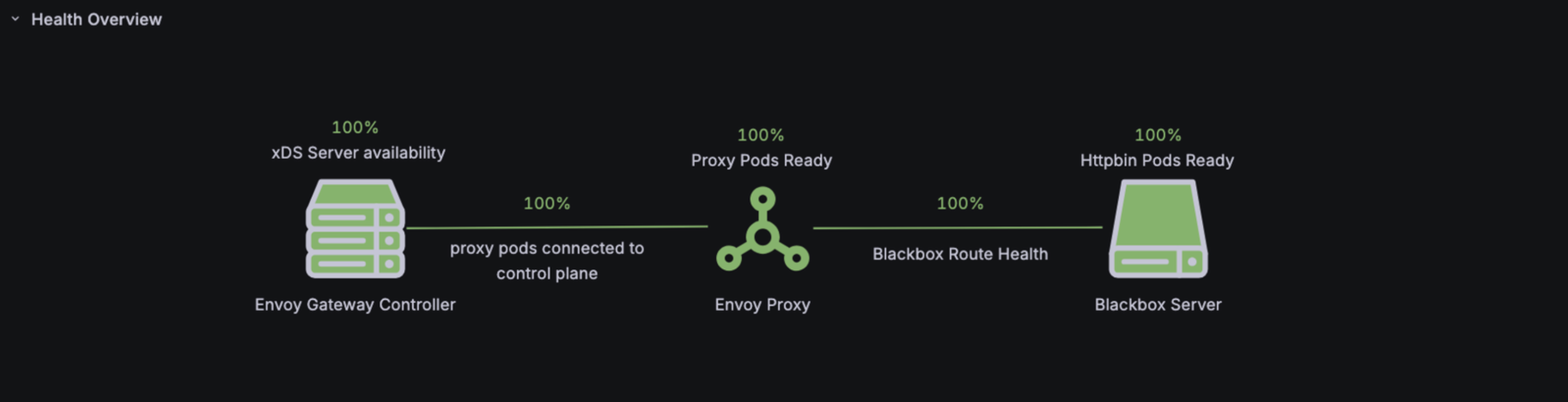

We also introduced a dedicated Envoy Gateway Health Dashboard, powered by k6-blackbox probes, that shows at a glance whether Envoy Proxy is healthy across every cluster and environment. If someone reports that "Zapier is returning 5xxs everywhere," this is one of the first places an SRE checks. If the dashboard is green, the issue is likely to be at a different layer in the stack. If it lights up red, an incident is triggered automatically, paging the on-call Site Reliability Engineers to resolve the situation.
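For readers less familiar with SLO arithmetic, here is a minimal Python sketch of how probe outcomes could be turned into an availability figure and an error budget. It assumes, hypothetically, that the indicator is the fraction of successful probes against the gateway. The target and the counts are made-up example values, not Zapier's.

```python
def error_budget(good, total, target):
    """Return (availability, fraction of the error budget consumed) for a probe window."""
    if total <= 0:
        raise ValueError("need at least one probe result")
    if not 0 < target < 1:
        raise ValueError("target must be between 0 and 1, exclusive")
    availability = good / total
    allowed_failures = (1 - target) * total  # failures the target tolerates in this window
    consumed = (total - good) / allowed_failures
    return availability, consumed


# Hypothetical window: 10,000 probes, 5 failures, 99.9% target.
availability, consumed = error_budget(good=9_995, total=10_000, target=0.999)
print(f"availability={availability:.4%} budget_consumed={consumed:.0%}")
```

Because the probes in this model exercise only the gateway, a failing backend does not eat into this budget, which is the separation of signals the section describes.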

Our processes are still evolving, but these tools already give us something we never had before: reliable, direct insight into the health of our ingress layer, independent from everything downstream of it.

Gateway by the numbers

Envoy Gateway now powers all inbound and cross-service traffic at Zapier, and the numbers speak for themselves. The migration gave us a unified view of performance, and for the first time, we can see the entire ingress surface in a single, consistent set of dashboards.

Below is a snapshot of what the platform looks like today.

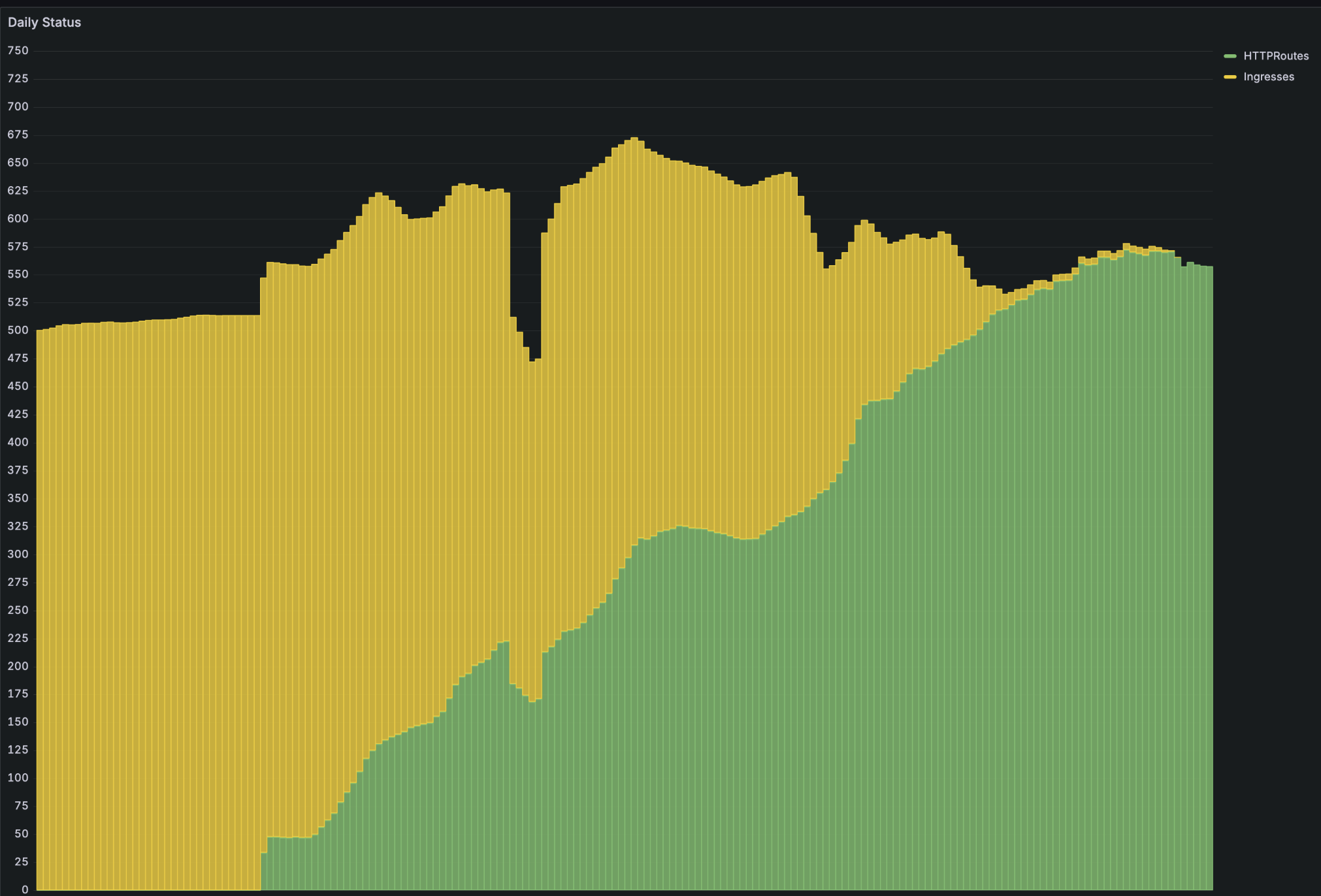

Ingress to HTTPRoutes in production

In less than six months, we migrated all ~500 ingresses over to HTTPRoutes in production.

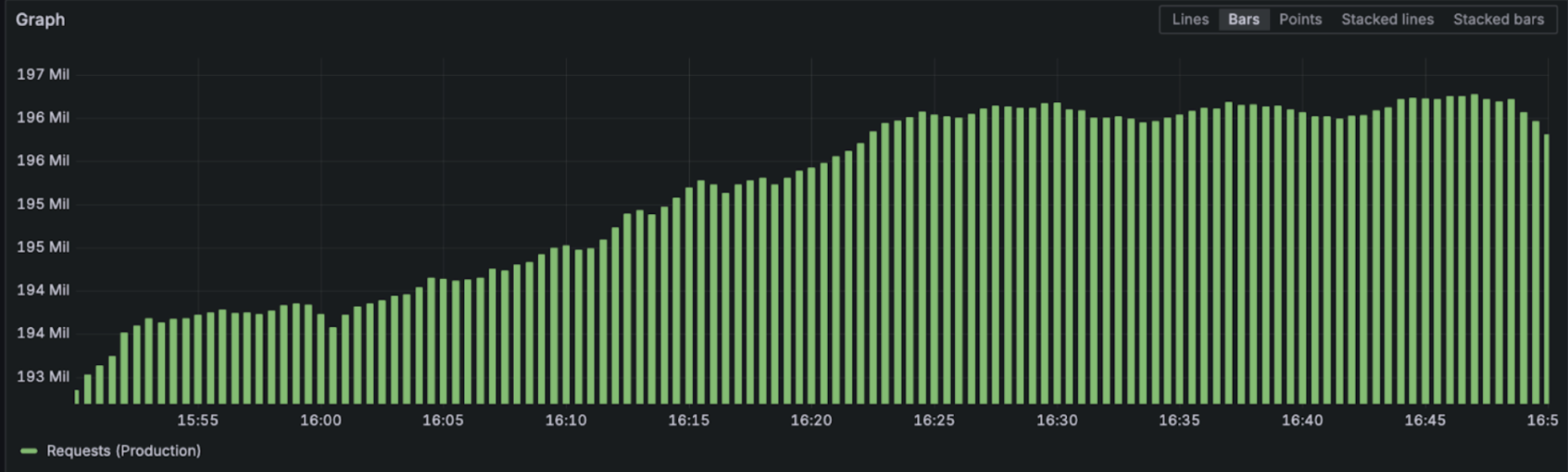

Overall traffic at a glance

Across all production gateways we're pushing 200 million requests per hour / 63,000 requests per second.

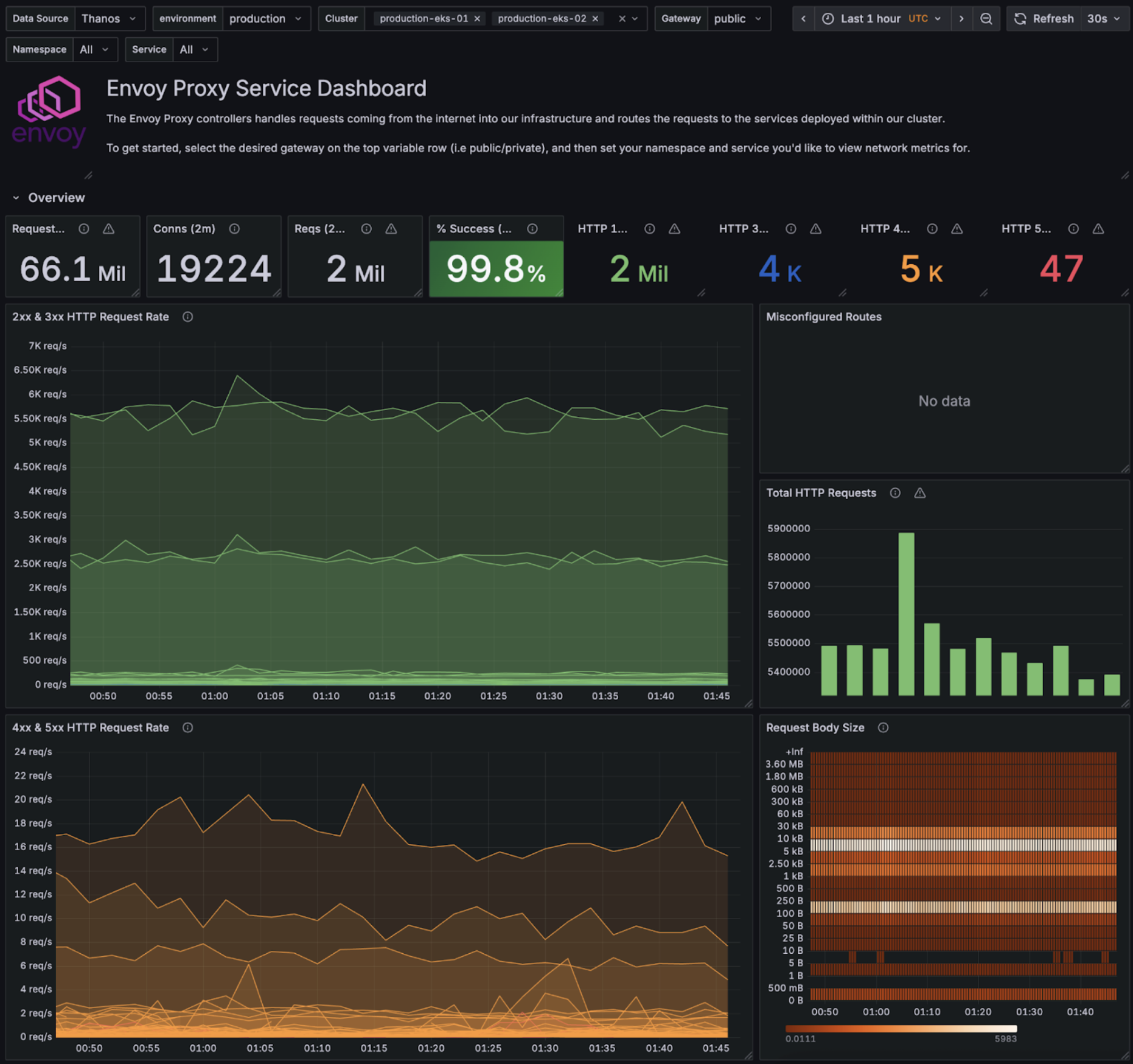

Here is an example of a dashboard that engineering teams can use to explore their service in detail and see precisely how traffic is performing over time.

A unified vision

The migration gave us something bigger than a new ingress layer. It gave us consistency. Every service behind Envoy Gateway now benefits from the same routing model, the same observability signals, and the same policy framework. There is one set of metrics, one source of truth for access logs, and one control plane that defines how traffic flows through Zapier. For the first time, we have a single dashboard that lets us understand the entire edge at a glance. From the edge to the service, the same data powers visibility, rate limiting, retries, and incident response.

This consistency is a major step toward our broader goal of consolidating tooling and reducing toil. Teams no longer need to maintain their own ingress quirks or build custom metrics pipelines. Reliability, security, and observability come by default. The migration also unlocked capabilities that were previously out of reach and, most importantly, aligned how we deploy, observe, and operate systems at the edge. We can move faster, debug smarter, and continue scaling without the sprawl. The work will continue, but we now have a solid foundation and a clear path forward.

Acknowledgments

This migration succeeded because of the incredible work of many people across Zapier. This project was ambitious and sometimes chaotic, but it was also a genuine cross-team effort. The platform we ended up with reflects the best of Zapier's engineering culture: collaboration, curiosity, and a willingness to keep digging until we find the real answer.

Dave Winiarski

For leading many of the technical decisions of the migration and tackling some of the most obscure and challenging issues along the way. Many of the breakthroughs in this project happened because Dave refused to give up on the hard problems.

Alec Hinh

For building the dashboards and observability tooling that made it possible to understand the system we were migrating to. Alec's work gave us clarity at moments when everything felt murky.

James Hong

For iterating on our HTTPRoute Helm charts, fixing subtle edge cases, and guiding numerous migrations. James also traced OOM issues in the control plane to noisy upstream secret updates and helped ship the mitigations that stabilized Gateway performance across environments.

Ken Ng

For strengthening our authorization layer by improving our AuthZ service and ensuring it could support the scale and complexity of the migration. Ken also partnered closely with teams during cutovers and contributed directly to the day-to-day work of moving services to Envoy Gateway.

César Ortega

For organizing and tracking the early migration effort with meticulous documentation, Jira coordination, and clear migration guides. César ensured we always knew what had been done and what was next.